
Vive

VR experiences and SDK

Role: Concept Vision, Research, IA Flows, UX, UI, Storyboards, 3D Assets for build, Tech Demo

Responsibilities: Research, IA flows, Concept Designs, 3D Asset creation, UX presentations, Overseeing development of the demo in Unity

Process: Research, Sketch, Iterate Solutions, Create Builds, Support Developers

Solution/Result: HTC increased headset camera passthrough resolution to allow this experience and others like it to be fully realized.

This was a conceptual VR experience: a project to build Lego models in VR and to create an instructional overlay experience in AR. The scope of the project was not only to create fun experiences, but also to lay the groundwork for an eventual SDK for Vive AR that future experiences could build on. Lego bricks provided a unique opportunity to explore a wide range of experiences and to solve some technical issues with Vive's VR hardware.

For the first phase of the project, I needed to collect data on user needs for the product and create profiles of the core users. Since Lego is a successful product in and of itself, there needed to be a real user need or problem for VR/AR to solve.

The application consisted of a large multimodal icon with a drawer that would slide out to reveal additional functionality. The main app icon would indicate whether the user was recording or broadcasting their game screen.

My initial concept was an enhanced way to play with Lego bricks, or to build models in VR. This required new methods of interaction. To be successful, the HMD cameras would need to recognize user gestures, and the system would need to provide a library of Lego bricks along with the many ways they could be interacted with.

Project Spec and Proposals

I decided to combine AR and VR with Lego for two experiences. Experience (1) would focus on instructional help. The idea was to create a holographic-style set of build instructions that would appear next to or over the build as it was being created. This would guide the user through building a model without the need for paper instructions or turning pages. Experience (2) focused on play after the model was finished. This would include single-player play with the model and digital assets, as well as remote multiplayer play with several models.

Once I finished the project scope, I created proposals for both concepts, backed up with research for each experience. The end result would be an SDK from Vive that we would provide to partners, allowing them to create their own experiences with objects that build on or connect with one another.

Storyboards

For the next phase, I created storyboards of several interactions that we could choose to develop and research. One of the experiences was a way to use hand gestures to build with Lego bricks in VR (see below).

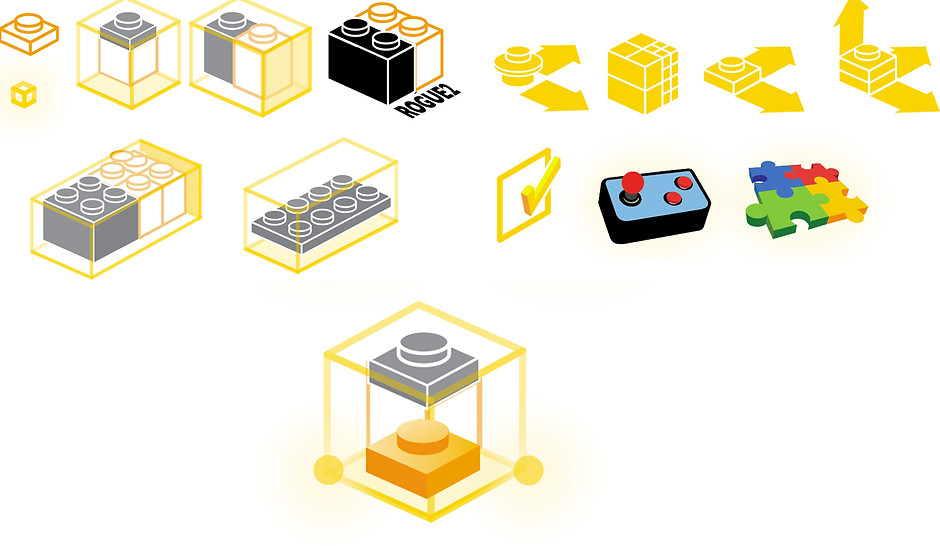

Additionally, I needed to create temporary visual UI assets for the demo, covering both iconography and interaction in AR/VR.

In developing the experience, we discovered that the Vive passthrough cameras were too low-resolution to recognize small objects like individual Lego bricks. At this point we needed to change the scope and concentrate on using AR/VR for gameplay with the finished models. At high resolution, the passthrough cameras were able to recognize a completed model quite easily. I settled on creating a set of instructional overlays that acted like a virtual booklet but didn't require full syncing with the user's build of the model. Once a user had built large enough portions of the model, the cameras could recognize the assembled components and provide digital overlays for final assembly.

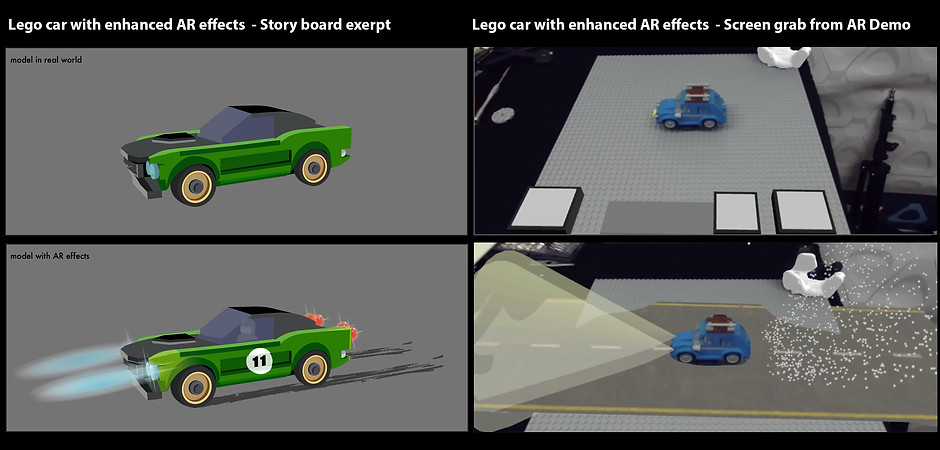

Starting the Demo: Exploring the Technology

I created an additional concept experience for a static Lego build or other desktop display model. The idea was to provide gameplay that didn't require interaction with the actual model (though portions of the model could also be mapped with interactive controls). The experience created environmental effects over a static model: animated roads, dust, weather effects, lights, and smoke could be created around a model to give the illusion of action. I also experimented with creating mapped buttons in the real world that would mimic the function of the virtual buttons. These consisted of large buttons or sliders made from Lego bricks. The idea was to give the user a tactile sense when interacting with the controls. They could slide a Lego slider or tap a spring-loaded Lego button and feel the real surface move, but all the interactions were mapped to the object and created in AR.

Tech Demo (1): Interactive Car


AR Demo for Lego Assembly

A big part of the experience was the final assembly of the model with AR-augmented instructions. This video shows the large components, with AR animations that recognize the main component and show where to add the completed pieces to the larger model.

After completing the build, the finished Lego model could then be used in gameplay. In this case, the environment or room around the user is turned into a field of space. Asteroids surround the player, and they can shoot lasers from their "real" Lego model toward the asteroids. Their Lego model would also get damaged and emit smoke if hit by one of the digital asteroids. This could be played within the surrounding room.

We could also remap the player's environment, surrounding them in space with an animated star field. The real model they assembled would still be visible in their hands, as we mapped the environment around it. This would enable the user to play either in an immersive environment or in an environment they created on the tabletop using additional models.

AR Demo for Lego Play with Full Environment

I was paired with a great development partner, and he was able to take the 3D assets and build working AR demos from my storyboards and spec. At this point I created 3D assets for the models and interactions. I crafted 3D versions of all the LEGO models we used, as the software would use the data in the 3D model to recognize and map its real-world equivalent. This also allowed us to create some interesting first-person VR flight experiences. In these experiences, a user would be able to view or pilot a model that was being moved around by another user. It also allowed us to create a multiplayer experience for play with the models.

AR Demo for Lego Build and Multiplayer Play

A big aspect of this demo was experimenting with multiplayer experiences. I wanted to create options for virtual players as well as for several players in the same room.

For the demo, we created an experience with two players in the same room, with AR overlays. The two users could work on the same project, sharing parts while each worked on an individual build. After completion, each player could use similar models that had themed controls and overlay graphics mapped onto them. Using the controllers/spacecraft, the players could mimic a dogfight, with damage overlaid onto their respective controllers/spacecraft.

Additionally, it was possible to virtually place one of the players into the ship held by the other player. This provided an additional experience in which one player directly manipulated the experience of the other.

Results

The Vive LEGO experience exposed a need for higher resolution passthrough video hardware. In order to provide the best experience, the cameras need to distinguish between small parts and show the surfaces clearly to the user.

Reaction

4K passthrough video is now a feature being explored for future Vive products.
